Computers counting craters

By Anthony Milbourne (Birkbeck College)

In this blog I would like to talk about automated crater counting, which as the name suggests, is the use of computers to identify and count craters. Computers are very good (and getting better) at many things, but they are still not as good as humans at many important classes of problems. The human brain is amazingly powerful (you knew that) and the latest generation of super-computers have only just reached the same order of magnitude of computing power. In other words, there are currently only a handful of machines in the world that can truly rival the human brain in terms of raw processing-power. Humans are particularly good at pattern recognition and computer scientists have been trying to create computer programs to do this for a long time, but the results generally look rather pathetic compared to humans. One application of pattern recognition is crater identification, which I will talk about below.

In general there are two approaches to pattern recognition: designed algorithms and machine learning.

A designed algorithm is a set of very specific instructions that allow the computer to solve a specific problem. It would be like programming a car to drive from point A to point B by defining exactly how much to accelerate or brake at what points and exactly how much to turn the wheel when. If you know the car will always be running on the same road then this approach is reliable and predictable, but it’s not very flexible. If a pedestrian steps into the road they are in trouble because the car will take no account of the changed environment, and if the car has to go on a different road it will be close to useless.

In machine learning the computer is still given detailed instructions, not on how to solve a specific task, but on how to learn what works well to solve the task. This would be like giving the car-driving program instructions on what the brake, accelerator and steering wheel did and then letting it experiment until it found a route from A to B. Obviously, this requires a training phase, where the algorithm crashes a lot (let’s hope the car wasn’t expensive), but eventually it figures out some general principles about driving and is able to deal with a certain amount of change in its environment.

Designed algorithms are safe and predictable; they don’t need training and are often easier to implement and faster to run, but they are inflexible. Machine learning may be better at the job and will certainly be more flexible, but it is tricky to train and you may end up training it to take short-cuts that you didn’t want: http://neil.fraser.name/writing/tank/

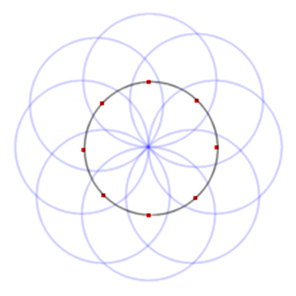

CHT circles: The points (in red) on the circle (in black) each create a ring of votes (in blue) around themselves. Where vote rings overlap the votes combine (more intense blue) and the greatest vote value indicates the centre of the circle. The radius of the vote rings is determined by the radius of the circle being searched for.

An example of a commonly used designed algorithm is the Circular Hough (pronounced like rough) Transform (CHT). We assume that the image is taken from above and that the vast majority of craters will be roughly circular. The program then uses a CHT to look for circular patterns of a set radius (perhaps repeating many times for different radii). A CHT essentially takes each image pixel that represents an edge and uses it to generate a vote for all possible circles that the edge could be on. The centres of all these possible image circles form a circle of votes around the edge point. If you do this for every edge point then the votes tend to build up at points that really are the centres of circles (it’s easier to see in the diagram).

Of course, this is much harder in practice as the images have a lot of noise and other features that generally confuse the algorithm, so various people have come up with various ways of improving it and making it faster. There are of course many other types of designed algorithm, but I won’t bore you with all of them.

An example of a machine learning approach is to use a neural network. This is a program that tries to simulate a simplified model of how the brain works. It consists of a large number of ‘nodes’ which are connected to each other by links of varying strength. The nodes are normally arranged in layers, and each node combines the inputs from nodes in the preceding layer and sends the result out to the nodes in the next layer. The nodes in the first layer act as input points and the nodes in the last layer as the output. The strength of the links can be varied in order to change the behaviour of the network, and this is done during training when the error from each training run is used to adjust the link strengths.

This is a very simple (too simple to be useful) example of a neural network. A set of values are passed to the input layer (in green) and an output is generated by the output layer (in purple). What happens in between is determined by the connection strengths, which are the result of training.

Neural networks are deceptively simple in concept but are very powerful and can end up spotting trends that are not clear to humans, or that are too complex or nuanced to implement easily as a designed algorithm. However the number of nodes needed to achieve anything useful is normally large, so figuring out what is actually happening inside the network is not practical. For this reason you can never be quite sure that the network, given new or unexpected data, won’t do something crazy!

Again, this is not the only way of implementing machine learning, but it gives an idea of the way this sort of system works – trained rather than designed.

In general, machine learning approaches take more processing power than designed algorithms, so in most cases a pipeline is used. First, a quicker, more predictable, designed algorithm is used to select areas of interest (potential craters), and then a machine learning approach is used to sort the real craters from the noise.

(TOP) The result of running an algorithm, based on the Hough Transform, on an HRSC image of Mars. The smooth terrain and crisp crater rims produce fairly good results, although there are still a few errors, some of which are glaringly obvious to a human. (Image: modified from HRSC/ESA). (BOTTOM) The result of running the same algorithm, on a tile from the Moon Zoo database. The degraded rims and noisy background confuse the algorithm which finds lots of craters, but almost all are in the wrong place! (Image: modified from NASA/GSFC/ASU)

(TOP) The result of running an algorithm, based on the Hough Transform, on an HRSC image of Mars. The smooth terrain and crisp crater rims produce fairly good results, although there are still a few errors, some of which are glaringly obvious to a human. (Image: modified from HRSC/ESA). (BOTTOM) The result of running the same algorithm, on a tile from the Moon Zoo database. The degraded rims and noisy background confuse the algorithm which finds lots of craters, but almost all are in the wrong place! (Image: modified from NASA/GSFC/ASU)

The images at left are an example of the kind of output that can be achieved by automated crater recognition (this one is based on Hough Transforms), and the problems with it. This is far from the best algorithm available, and other researchers have developed much more accurate programs, but they all suffer to a greater or lesser degree from image noise. The first image shows how accurate an algorithm can be in a clear image with little noise. It misses many smaller craters and there are a few false positives (which are somewhat surprising to a human eye), but in general it finds the rims of the most obvious craters very accurately. The second image features degraded crater rims, a lumpy surface and sub-optimal illumination. The result is that the same algorithm does very badly at spotting craters. This is not surprising; even a human would have to look harder at the second image, but the algorithm performs so badly that it is arguably not worth using, and this is the sort of image where humans really are the only (reliable) show in town at the moment.You might think that automated crater counting would be a direct competitor to crowd sourcing efforts like Moon Zoo, and in some cases you would be correct, but it can also be used as a complementary technique. This could be done by using moon Zoo crater identifications as the areas of interest and then running an algorithm to find the exact location of the crater rim, or using an algorithm to spot Moon Zoo data which has been entered by mistake, or by users who didn’t understand the task.

Most interestingly, in my view, is the idea that algorithms are just another type of user. Some algorithms are not great at spotting craters, but some human users are a bit variable too. Admittedly, the best humans are far better than the best algorithms, but the best algorithms are probably better than the worst humans, so they fall within the quality spectrum that Moon Zoo (and the rest of the Zooniverse) already deals with. They probably won’t be much good at spotting unusual objects and they certainly won’t be much fun on the forums, but perhaps we might one day be working with algorithms as our (less able) peers.

By Anthony Milbourne (Birkbeck College)

Regarding “algorithms as peers”, you might check out the “Eyewire” project; a citizen science project where humans correct the efforts of a 3-D space-filling algorithm designed to map out neurons in retina samples. Basically the users connect the areas which the algorithm mistakenly left disconnected.